Crowd Workers Are an Integral Piece of the Ethical AI Puzzle – Part 2

Building ethical AI isn’t a one-and-done, box-checking exercise; it’s a continual process made up of many nuanced considerations and decisions. Our responsibility is therefore not only to build AI models that are robust to bias, but also to train and maintain them with unbiased, representative data to begin with. That’s why we must be mindful of our data collection practices and, in particular, of the role crowdsourcing platforms play in collecting that data.

In part two of our series on crowd work and its place in the ethical AI life cycle, we tackle the issue of pay and why being ethical means changing the status quo in what and how we pay those who provide the lifeblood of our AI.

Crowd workers as cheap, scalable labor

Due to the immense data needs of machine learning models, and deep neural networks in particular, there has always been, and always will be, a critical need for cost-effective ways of generating that data quickly. Distributed, crowdsourced data collection and annotation have traditionally been the answer, dividing the work into manageable chunks for hundreds or thousands of contributors to tackle piecemeal for small bounties. For the data requester, this offers a flexible and economical on-demand workforce.

Workers benefit as well: they can take on as much or as little work as they choose, which gives them a great deal of autonomy and lets them treat crowd work as either a part-time or a full-time endeavor. Businesses, for their part, gain a scalable data workforce capable of quick turnarounds without the massive overhead of employing the full-time staff that such large tasks would otherwise require.

At this point, astute observers will see that therein lie the problems with crowd work. Because the roles aren’t traditional full- or part-time employment, crowd workers aren’t afforded many of the same benefits or labor protections, which makes steady work tenuous. And because the work is done over the internet, competition between workers can be fierce, driving down pay on the premise that there’s always someone willing to accept greater risk and lower pay than the next person.

This confluence of factors often results in poor work, plagued with quality and bias issues, as workers divide their attention across as many tasks as possible to maximize profit. It also attracts bad actors looking for as many quick, if minuscule, payouts as possible. For those who do devote their full attention and effort to tasks, however, the pay becomes far too low to justify crowd working in the first place. The overall result in both cases is poor-quality data, which in turn results in poor-quality AI.

A high-profile example is ImageNet, the popular image dataset designed to train object recognition AI, which was collected and annotated on the Amazon Mechanical Turk crowdsourcing platform. Thousands of images in the dataset were annotated with racist and misogynistic labels: a costly oversight to correct once finally caught, and a potentially disastrous one for models trained on similarly tainted data. This is doubly troubling considering just how popular ImageNet is in computer vision, where it’s one of the leading training and benchmarking datasets.

A major contributor to the issue is the novelty of platform-based work in general. Inroads have already been made to regulate platform work, such as app-based ride-hailing and food delivery services. Crowdsourced data work, though, is still an outlier in this regard, further complicated by its obscurity to a general public that isn’t aware of its role in building the AI they use every day. As such, it’s difficult for policymakers to define this new, 21st-century style of work and decide what protections its workers should be afforded while keeping it economically feasible for AI-powered businesses. That doesn’t mean regulation isn’t coming, however, and businesses would be wise to do all they can to both set standards and lead in this area, as Defined.ai has.

For its part, Defined.ai has gotten out in front of these concerns by simply paying workers much more than they would normally receive on competing platforms. In fact, Defined.ai’s stance is to pay workers at least the minimum wage of the regions they operate from, with the majority of tasks paying more than the local minimum wage of the regions for which they’re commissioned. This gives crowd workers both the incentive and the expectation to devote their full attention to their given tasks, addressing some of the aforementioned quality issues.

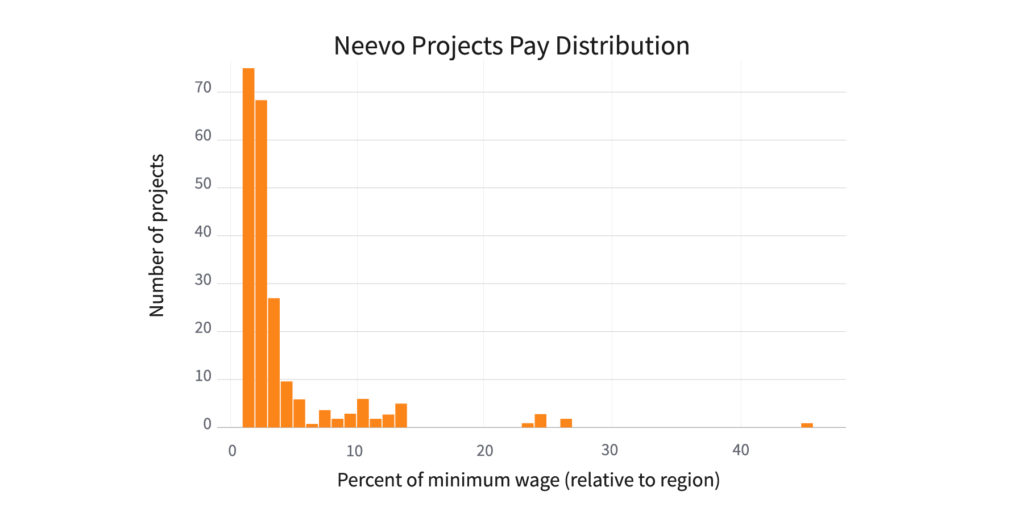

As the plot above shows, the number of Neevo projects paid at or above minimum wage is quite high, with the lion’s share of projects paying one to three times the minimum wage of the region in which the task is requested. (A single project may involve anywhere from tens to thousands of crowd workers completing its tasks.) In several cases, Neevo projects pay up to 45 times the minimum wage, mostly because the minimum wage in those regions is considerably lower than in other locales. No Neevo tasks are paid less than minimum wage.
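To make the comparison concrete, here is a minimal sketch of how a project’s pay could be expressed as a multiple of the regional minimum wage. It is an illustration under stated assumptions, not Defined.ai’s actual method: the `Project` fields, the `wage_multiple` and `meets_minimum_wage` helpers, and the idea of deriving an hourly rate from per-task pay and average task time are all hypothetical, and the figures in the usage example are made up.

```python
from dataclasses import dataclass


@dataclass
class Project:
    """Hypothetical project record; field names are illustrative only."""
    name: str
    pay_per_task: float       # payout per completed task, in local currency
    minutes_per_task: float   # assumed average time a worker spends on one task
    regional_min_wage: float  # hourly minimum wage in the same currency


def wage_multiple(project: Project) -> float:
    """Return effective hourly pay divided by the regional hourly minimum wage."""
    if project.minutes_per_task <= 0 or project.regional_min_wage <= 0:
        raise ValueError("task duration and minimum wage must be positive")
    tasks_per_hour = 60.0 / project.minutes_per_task
    hourly_pay = project.pay_per_task * tasks_per_hour
    return hourly_pay / project.regional_min_wage


def meets_minimum_wage(project: Project) -> bool:
    """True when the effective hourly pay is at or above the minimum wage."""
    return wage_multiple(project) >= 1.0


if __name__ == "__main__":
    # Made-up numbers: 0.50 per task, 2 minutes per task, minimum wage of 10.00/hour.
    example = Project("example-project", 0.50, 2.0, 10.0)
    print(f"{example.name}: {wage_multiple(example):.2f}x minimum wage")
    print("meets minimum wage:", meets_minimum_wage(example))
```

A ratio like this is only as good as its task-time estimate: if workers typically need longer than assumed, the effective multiple drops accordingly.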

Minimum wage as a concept can be complex and sometimes controversial, given its politics and the varied costs of living even within the same country. Even so, Defined.ai’s efforts to improve crowd work pay by targeting rates at or above minimum wage put us within striking distance of achieving livable wages across the board, which is where we as an industry should be headed. The idea is that a crowd workforce that is paid well is better suited to producing the best results and more likely to participate again.

If crowd workers produce the lifeblood of our AI, it’s up to us to reciprocate and provide the best support possible to our crowd workers.