The endless applications and possibilities of Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) are a class of deep learning models that have been gaining popularity due to their ability to generate high-quality, realistic images and other types of data. A GAN consists of two networks, a generator and a discriminator, that are trained against each other. The generator produces fake data, while the discriminator attempts to distinguish between real and fake data. As the discriminator gets better at spotting fakes, the generator is pushed to produce more convincing samples, and this competition ultimately leads to highly realistic generated data.
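To make the generator-versus-discriminator competition concrete, here is a minimal sketch of one adversarial training step on one-dimensional data, written in plain Python. Everything in it is an illustrative assumption rather than a reference implementation: the generator is a linear map `a * z + b`, the discriminator is a logistic model `sigmoid(w * x + c)`, the gradients are derived by hand, and the generator uses the common "non-saturating" loss `-log D(G(z))`. Real GANs use deep networks and an autodiff library.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def generator(z, a, b):
    """Toy generator (assumption): maps noise z to a fake sample."""
    return a * z + b


def discriminator(x, w, c):
    """Toy discriminator (assumption): probability that x is real."""
    return sigmoid(w * x + c)


def train_step(real_batch, params, lr=0.05):
    """One discriminator update followed by one generator update."""
    w, c, a, b = params
    n = len(real_batch)
    noise = [random.gauss(0.0, 1.0) for _ in range(n)]
    fake_batch = [generator(z, a, b) for z in noise]

    # Discriminator: minimise -[log D(x) + log(1 - D(G(z)))].
    grad_w = grad_c = 0.0
    for x, f in zip(real_batch, fake_batch):
        d_real = discriminator(x, w, c)
        d_fake = discriminator(f, w, c)
        grad_w += (-(1.0 - d_real) * x + d_fake * f) / n
        grad_c += (-(1.0 - d_real) + d_fake) / n
    w -= lr * grad_w
    c -= lr * grad_c

    # Generator: minimise -log D(G(z)), using the updated discriminator.
    grad_a = grad_b = 0.0
    for z in noise:
        d_fake = discriminator(generator(z, a, b), w, c)
        grad_a += -(1.0 - d_fake) * w * z / n
        grad_b += -(1.0 - d_fake) * w / n
    a -= lr * grad_a
    b -= lr * grad_b
    return (w, c, a, b)


if __name__ == "__main__":
    random.seed(0)
    params = (0.0, 0.0, 1.0, 0.0)  # w, c, a, b
    for _ in range(2000):
        real = [random.gauss(4.0, 1.0) for _ in range(64)]
        params = train_step(real, params)
    print("generator offset b after training:", params[3])
```

Each step first nudges the discriminator toward separating real from fake samples, then nudges the generator toward the region the discriminator currently labels as real. GAN training can oscillate, so the printed value should be read as a rough indication rather than a guaranteed result.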

GANs in data augmentation and medical imaging

GANs are commonly used for data augmentation, the process of creating additional data for training other machine learning (ML) models. This technique is useful when data is scarce or costly to collect and the downstream model needs large amounts of it to perform well. For example, when training a model to identify abnormalities in medical images such as MRIs or X-rays, it can be difficult to obtain enough abnormal scans, whether because of limited resources or medical privacy laws. By using GANs to generate additional training data, medical ML models can be trained on larger and more diverse datasets, potentially leading to improved performance and better patient outcomes.
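As a rough sketch of how synthetic samples might be mixed into a training set, the helper below combines real samples with generated ones at a chosen ratio. The function name `augment_with_synthetic` and the `sample_generator` callable are hypothetical; `sample_generator` stands in for a trained GAN generator and is not part of any particular library.

```python
import random


def augment_with_synthetic(real_samples, sample_generator,
                           synthetic_fraction=0.5, seed=0):
    """Return a shuffled list of (sample, is_synthetic) pairs.

    synthetic_fraction is the share of the final set that is synthetic.
    sample_generator is a zero-argument callable (a placeholder for a
    trained GAN generator) that returns one synthetic sample per call.
    """
    if not 0.0 <= synthetic_fraction < 1.0:
        raise ValueError("synthetic_fraction must be in [0, 1)")
    n_real = len(real_samples)
    n_synth = round(n_real * synthetic_fraction / (1.0 - synthetic_fraction))

    combined = [(x, False) for x in real_samples]
    combined += [(sample_generator(), True) for _ in range(n_synth)]
    random.Random(seed).shuffle(combined)
    return combined
```

Keeping the `is_synthetic` flag alongside each sample is a design choice in this sketch: it makes it easy to evaluate the downstream model on real data only, so that gains from augmentation are not measured on generated images.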

GANs in drug discovery and development

GANs can also be applied to drug discovery by generating new molecular structures that exhibit properties similar to those of existing drugs. By training a GAN on a dataset of known drug molecules, the generator learns the patterns and features of those molecules and can generate synthetic molecules with similar properties. These candidates can then be screened and tested as potential novel drugs, which could expedite drug discovery and help identify more effective and safer compounds.

Other applications

Image and video generation

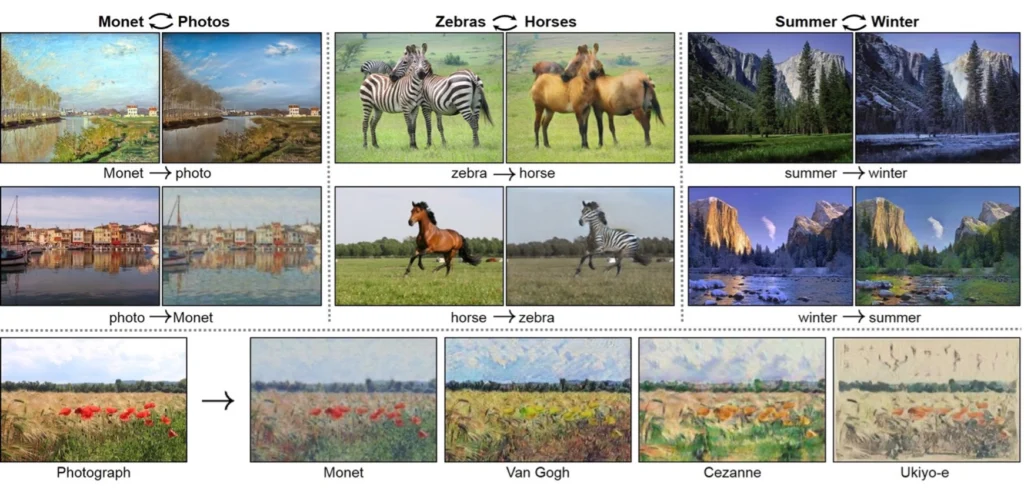

Other applications of GANs can be seen in image and video generation and “style transfer.” For example, the cleverly named GauGAN can take a sketch as input and generate a realistic image, with each color in the sketch representing a different class (e.g., sky, mountains, or lake). The accompanying figure shows examples of transferring style using GANs [1].
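The idea that each color in a sketch stands for a class can be illustrated with a small sketch that turns a colored grid into a label map and then into one binary channel per class, a common way to feed such maps to a network. The palette, class names, and helper functions here are assumptions made for illustration and are not GauGAN's actual preprocessing.

```python
# Hypothetical palette: RGB color -> class name.
PALETTE = {
    (135, 206, 235): "sky",
    (139, 137, 137): "mountain",
    (0, 0, 255): "lake",
}
CLASSES = sorted(set(PALETTE.values()))  # ["lake", "mountain", "sky"]


def sketch_to_label_map(sketch):
    """Convert a 2-D grid of RGB tuples into a grid of class indices."""
    return [[CLASSES.index(PALETTE[pixel]) for pixel in row] for row in sketch]


def one_hot(label_map, num_classes):
    """Return one binary mask per class (channel-first layout)."""
    return [
        [[1 if label == k else 0 for label in row] for row in label_map]
        for k in range(num_classes)
    ]


sky, mountain, lake = (135, 206, 235), (139, 137, 137), (0, 0, 255)
sketch = [[sky, sky], [mountain, lake]]
labels = sketch_to_label_map(sketch)
masks = one_hot(labels, len(CLASSES))
```

A generator conditioned on `masks` would then be asked to paint realistic texture into each region according to its class.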

Another example is the Super-Resolution GAN (SRGAN), which takes a low-resolution image and produces a high-resolution version that looks more realistic than the output of conventional up-sampling. Additionally, GANs can be used to generate images of objects or scenes that do not exist in the real world, such as fantastical creatures or futuristic cities, which could be useful for creating visual effects or generating backgrounds for movies and video games. GANs can also be used for image filtering, such as adding makeup or altering a person’s appearance. This has potential in the beauty industry, where customers could try on makeup virtually before purchasing.
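For contrast with a learned super-resolution model, here is a minimal sketch of the simplest kind of up-sampling, nearest-neighbor, on a grid of pixel values. The function name is an assumption made for this example. It only repeats existing pixels, so it cannot add any detail that was missing from the input.

```python
def upsample_nearest(image, factor):
    """Enlarge a 2-D grid of pixel values by repeating each pixel."""
    out = []
    for row in image:
        # Repeat each pixel horizontally, then repeat the row vertically.
        wide = [pixel for pixel in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


small = [[1, 2],
         [3, 4]]
large = upsample_nearest(small, 2)
# large is a 4x4 grid made of 2x2 blocks of the original values
```

A super-resolution GAN is instead trained to fill in plausible fine texture, which is why its results can look sharper than plain up-sampling.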

Audio generation

GANs can also be extended to other modalities, such as audio. One application is generating new music that resembles the music in the training dataset. This has been used to generate new melodies, chord progressions, and even entire songs. For instance, a GAN trained on a jazz dataset can generate new jazz tracks, providing a useful tool for composers seeking inspiration or music producers looking to create music quickly and easily. Another musical application is music style transfer, where a GAN is trained to transfer the style of one piece of music to another, for example applying the style of a classical composition to a pop song or vice versa. This can be useful for musicians exploring different styles and genres, or for producers creating tracks that incorporate elements from multiple styles.

Improving natural disaster responses and climate change awareness

GANs also have potential applications in improving early warning and disaster response systems. One challenge in this domain is the difficulty of collecting high-quality images of natural disasters, like hurricanes and floods, which can cause significant damage and loss of life. Gathering visual data at actual disaster sites can be hazardous, which hinders the training and evaluation of disaster response systems. GANs could be used to generate synthetic images of disasters for training and evaluating the corresponding models. This could help improve the effectiveness of early warning and disaster response efforts, ultimately saving lives.

Additionally, GANs can be used to generate high-quality synthetic images of climate change effects, such as glacier melting and coastal flooding. If used in educational materials, public awareness campaigns, and other efforts to communicate the impacts of climate change to a broader audience, these synthetic images could raise awareness, potentially leading to more effective action to address climate change.

How can we help?

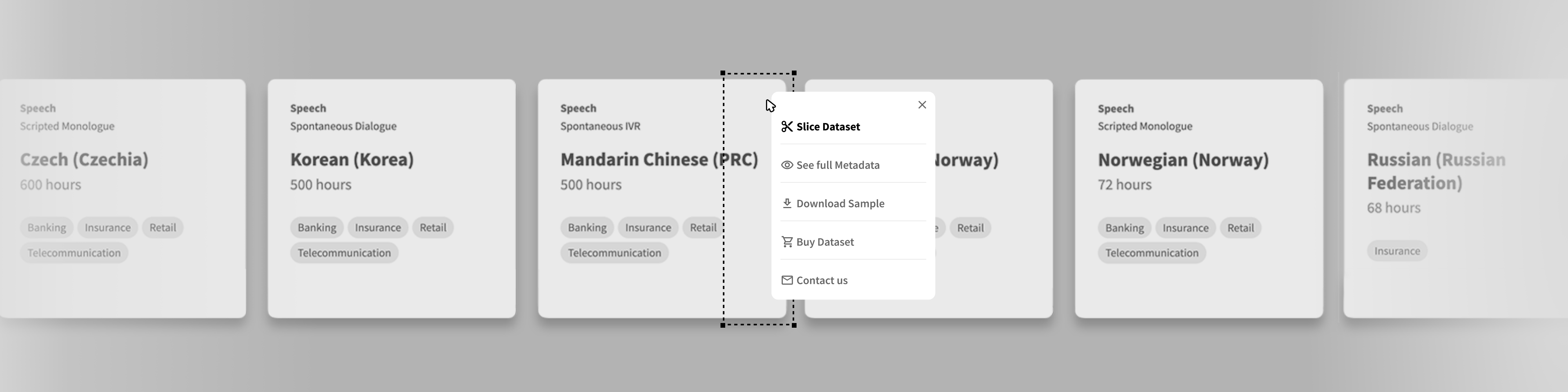

Generative Adversarial Networks are invaluable deep learning models with almost endless beneficial potential. Defined.ai can provide a wide range of high-quality, diverse text, image, video, and audio datasets that can be used to train GAN models for a variety of tasks, such as audio synthesis, text style transfer, and chatbot response generation, to name a few.

For example, if you’re developing a GAN that can generate the voice of a company representative speaking in multiple languages for your website, Defined.ai can provide large and diverse training datasets of audio recordings in the requisite languages. Alternatively, if you are interested in a chatbot response generator, we can provide the necessary training sets in either text or audio format.

Whatever your application, get in touch today to learn how Defined.ai is your best resource for building robust and effective GAN projects.

References:

[1] Jun-Yan Zhu, Taesung Park, Phillip Isola, and Alexei A. Efros. “Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks,” in IEEE International Conference on Computer Vision (ICCV), 2017.